Библиотека сайта rus-linux.net

The book is available and called simply "Understanding The Linux Virtual Memory Manager". There is a lot of additional material in the book that is not available here, including details on later 2.4 kernels, introductions to 2.6, a whole new chapter on the shared memory filesystem, coverage of TLB management, a lot more code commentary, countless other additions and clarifications and a CD with lots of cool stuff on it. This material (although now dated and lacking in comparison to the book) will remain available although I obviously encourge you to buy the book from your favourite book store :-) . As the book is under the Bruce Perens Open Book Series, it will be available 90 days after appearing on the book shelves which means it is not available right now. When it is available, it will be downloadable from http://www.phptr.com/perens so check there for more information.

To be fully clear, this webpage is not the actual book.

Next: 5.4 Memory Regions Up: 5. Process Address Space Previous: 5.2 Managing the Address Contents Index

Subsections

5.3 Process Address Space Descriptor

The process address space is described by the mm_struct

struct meaning that only one exists for each process and is shared

between threads. In fact, threads are identified in the task list by

finding all task_structs which have pointers to the same

mm_struct.

A unique mm_struct is not needed for kernel threads as they

will never page fault or access the userspace portion5.6. This results in the task_struct![]()

mm

field for kernel threads always being NULL. For some tasks such as the boot

idle task, the mm_struct is never setup but for kernel threads,

a call to daemonize() will call exit_mm() to decrement

the usage counter.

As Translation Lookaside Buffer (TLB) flushes are extremely

expensive, especially with architectures such as the PPC, a technique called

lazy TLB is employed which avoids unnecessary TLB flushes by

processes which do not access the userspace page tables5.7. The call

to switch_mm(), which results in a TLB flush, is avoided by

``borrowing'' the mm_struct used by the previous task and

placing it in task_struct![]()

active_mm. This technique

has made large improvements to context switches times.

When entering lazy TLB, the function enter_lazy_tlb() is called

to ensure that a mm_struct is not shared between processors

in SMP machines, making it a NULL operation on UP machines. The second time

use of lazy TLB is during process exit when start_lazy_tlb()

is used briefly while the process is waiting to be reaped by the parent.

The struct has two reference counts called mm_users and

mm_count for two types of ``users''. mm_users

is a reference count of processes accessing the userspace portion of this

mm_struct, such as the page tables and file mappings. Threads

and the swap_out() code for instance will increment this count

making sure a mm_struct is not destroyed early. When it drops

to 0, exit_mmap() will delete all mappings and tear down the page

tables before decrementing the mm_count.

mm_count is a reference count of the ``anonymous users''

for the mm_struct initialised at 1 for the ``real'' user. An

anonymous user is one that does not necessarily care about the userspace

portion and is just borrowing the mm_struct. Example users

are kernel threads which use lazy TLB switching. When this count drops to 0,

the mm_struct can be safely destroyed. Both reference counts

exist because anonymous users need the mm_struct to exist even

if the userspace mappings get destroyed and there is no point delaying the

teardown of the page tables.

The mm_struct is defined in ![]()

linux/sched.h![]() as follows:

as follows:

210 struct mm_struct {

211 struct vm_area_struct * mmap;

212 rb_root_t mm_rb;

213 struct vm_area_struct * mmap_cache;

214 pgd_t * pgd;

215 atomic_t mm_users;

216 atomic_t mm_count;

217 int map_count;

218 struct rw_semaphore mmap_sem;

219 spinlock_t page_table_lock;

220

221 struct list_head mmlist;

222

226 unsigned long start_code, end_code, start_data, end_data;

227 unsigned long start_brk, brk, start_stack;

228 unsigned long arg_start, arg_end, env_start, env_end;

229 unsigned long rss, total_vm, locked_vm;

230 unsigned long def_flags;

231 unsigned long cpu_vm_mask;

232 unsigned long swap_address;

233

234 unsigned dumpable:1;

235

236 /* Architecture-specific MM context */

237 mm_context_t context;

238 };

The meaning of each of the field in this sizeable struct is as follows:

- mmap The head of a linked list of all VMA regions in the address space;

- mm_rb The VMAs are arranged in a linked list and in a red-black

tree for fast lookups. This is the root of the tree;

- mmap_cache The VMA found during the last call to

find_vma()is stored in this field on the assumption that the area will be used again soon; - pgd The Page Global Directory for this process;

- mm_users A reference count of users accessing the userspace portion

of the address space as explained at the beginning of the section;

- mm_count A reference count of the anonymous users for the

mm_structstarting at 1 for the ``real'' user as explained at the beginning of this section; - map_count Number of VMAs in use;

- mmap_sem This is a long lived lock which protects the VMA list

for readers and writers. As users of this lock require it for a long time

and may need to sleep, a spinlock is inappropriate. A reader of the list

takes this semaphore with

down_read(). If they need to write, it is taken withdown_write()and thepage_table_lockspinlock is later acquired while the VMA linked lists are being updated; - page_table_lock This protects most fields on the

mm_struct. As well as the page tables, it protects the RSS (see below) count and the VMA from modification; - mmlist All

mm_structs are linked together via this field; - start_code, end_code The start and end address of the code section;

- start_data, end_data The start and end address of the data section;

- start_brk, brk The start and end address of the heap;

- start_stack Predictably enough, the start of the stack region;

- arg_start, arg_end The start and end address of command line

arguments;

- env_start, env_end The start and end address of environment variables;

- rss Resident Set Size (RSS) is the number of resident

pages for this process;

- total_vm The total memory space occupied by all VMA regions in

the process;

- locked_vm The number of resident pages locked in memory;

- def_flags Only one possible value,

VM_LOCKED. It is used to determine if all future mappings are locked by default or not; - cpu_vm_mask A bitmask representing all possible CPUs in an SMP

system. The mask is used by an InterProcessor Interrupt (IPI)

to determine if a processor should execute a particular function or not. This

is important during TLB flush for each CPU;

- swap_address Used by the pageout daemon to record the last address

that was swapped from when swapping out entire processes;

- dumpable Set by

prctl(), this flag is important only when tracing a process; - context Architecture specific MMU context.

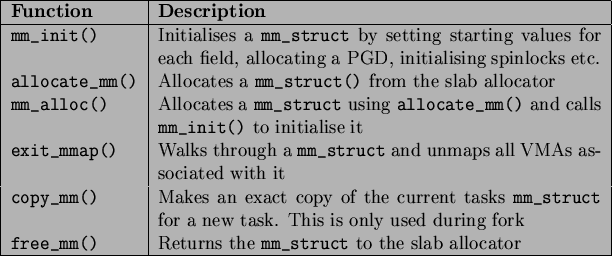

There are a small number of functions for dealing with

mm_structs. They are described in Table 5.2.

5.3.1 Allocating a Descriptor

Two functions are provided to allocate a mm_struct. To be

slightly confusing, they are essentially the same but with small important

differences. allocate_mm() is just a preprocessor macro which

allocates a mm_struct from the slab allocator (see

Chapter 9). mm_alloc() allocates from

slab and then calls mm_init() to initialise it.

5.3.2 Initialising a Descriptor

The first mm_struct in the system that is initialised is called

init_mm(). As all subsequent mm_struct's are copies,

the first one has to be statically initialised at compile time. This static

initialisation is performed by the macro INIT_MM().

242 #define INIT_MM(name) \

243 { \

244 mm_rb: RB_ROOT, \

245 pgd: swapper_pg_dir, \

246 mm_users: ATOMIC_INIT(2), \

247 mm_count: ATOMIC_INIT(1), \

248 mmap_sem: __RWSEM_INITIALIZER(name.mmap_sem), \

249 page_table_lock: SPIN_LOCK_UNLOCKED, \

250 mmlist: LIST_HEAD_INIT(name.mmlist), \

251 }

Once it is established, new mm_structs are created using their

parent mm_struct as a template. The function responsible for

the copy operation is copy_mm() and it uses init_mm()

to initialise process specific fields.

5.3.3 Destroying a Descriptor

While a new user increments the usage count with

atomic_inc(&mm->mm_users), it is decremented with a call

to mmput(). If the mm_users count reaches zero, all

the mapped regions are destroyed with exit_mmap() and the page

tables destroyed as there are no longer any users of the userspace portions. The

mm_count count is decremented with mmdrop() as all the

users of the page tables and VMAs are counted as one mm_struct

user. When mm_count reaches zero, the mm_struct

will be destroyed.

Footnotes

- ... portion5.6

- The only exception is faulting in vmalloc space for updating the current page tables against the master page table which is treated as a special case by the page fault handling code.

- ... tables5.7

- Remember that the kernel portion of the address space is always visible.

Next: 5.4 Memory Regions Up: 5. Process Address Space Previous: 5.2 Managing the Address Contents Index Mel 2004-02-15