Библиотека сайта rus-linux.net

The book is available and called simply "Understanding The Linux Virtual Memory Manager". There is a lot of additional material in the book that is not available here, including details on later 2.4 kernels, introductions to 2.6, a whole new chapter on the shared memory filesystem, coverage of TLB management, a lot more code commentary, countless other additions and clarifications and a CD with lots of cool stuff on it. This material (although now dated and lacking in comparison to the book) will remain available although I obviously encourge you to buy the book from your favourite book store :-) . As the book is under the Bruce Perens Open Book Series, it will be available 90 days after appearing on the book shelves which means it is not available right now. When it is available, it will be downloadable from http://www.phptr.com/perens so check there for more information.

To be fully clear, this webpage is not the actual book.

Next: 5.5 Exception Handling Up: 5. Process Address Space Previous: 5.3 Process Address Space Contents Index

Subsections

- 5.4.1 File/Device backed memory regions

- 5.4.2 Creating A Memory Region

- 5.4.3 Finding a Mapped Memory Region

- 5.4.4 Finding a Free Memory Region

- 5.4.5 Inserting a memory region

- 5.4.6 Merging contiguous regions

- 5.4.7 Remapping and moving a memory region

- 5.4.8 Locking a Memory Region

- 5.4.9 Unlocking the region

- 5.4.10 Fixing up regions after locking

- 5.4.11 Deleting a memory region

- 5.4.12 Deleting all memory regions

5.4 Memory Regions

The full address space of a process is rarely used, only sparse regions are.

Each region is represented by a vm_area_struct which

never overlap and represent a set of addresses with the same protection

and purpose. Examples of a region include a read-only shared library loaded

into the address space or the process heap. A full list of mapped regions a

process has may be viewed via the proc interface at /proc/PID/maps

where PID is the process ID of the process that is to be examined.

The region may have a number of different structures associated with it as

illustrated in Figure 5.2.

At the top, there is the vm_area_struct which on its own is

enough to represent anonymous memory.

If a file is memory mapped, the struct file is available through the

vm_file field which has a pointer to the struct

inode. The inode is used to get the struct address_space which

has all the private information about the file including a set of pointers

to filesystem functions which perform the filesystem specific operations

such as reading and writing pages to disk.

The struct vm_area_struct is declared as follows in

![]()

linux/mm.h![]() :

:

44 struct vm_area_struct {

45 struct mm_struct * vm_mm;

46 unsigned long vm_start;

47 unsigned long vm_end;

49

50 /* linked list of VM areas per task, sorted by address */

51 struct vm_area_struct *vm_next;

52

53 pgprot_t vm_page_prot;

54 unsigned long vm_flags;

55

56 rb_node_t vm_rb;

57

63 struct vm_area_struct *vm_next_share;

64 struct vm_area_struct **vm_pprev_share;

65

66 /* Function pointers to deal with this struct. */

67 struct vm_operations_struct * vm_ops;

68

69 /* Information about our backing store: */

70 unsigned long vm_pgoff;

72 struct file * vm_file;

73 unsigned long vm_raend;

74 void * vm_private_data;

75 };

- vm_mm The

mm_structthis VMA belongs to; - vm_start The starting address of the region;

- vm_end The end address of the region;

- vm_next All the VMAs in an address space are linked together in an

address-ordered singly linked list via this field;

- vm_page_prot The protection flags that are set for each PTE in this

VMA. The different bits are described in Table 4.1;

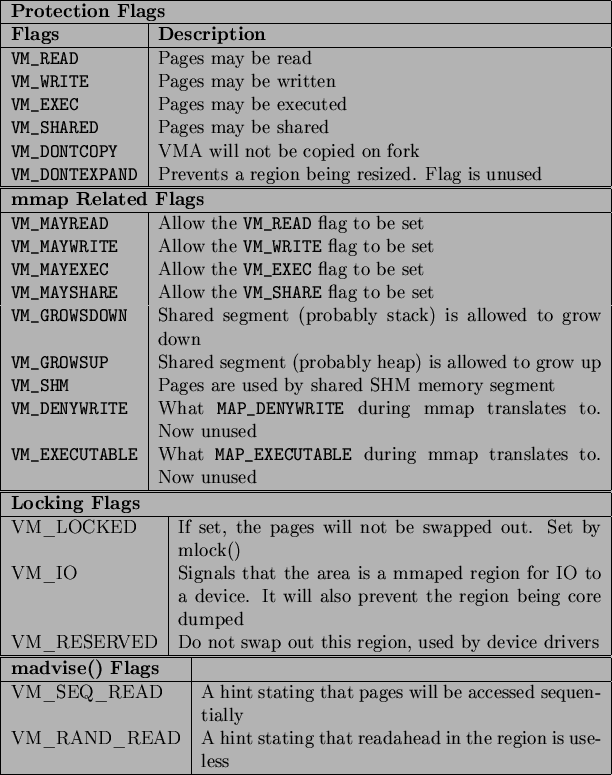

- vm_flags A set of flags describing the protections and properties of

the VMA. They are all defined in

linux/mm.h and are described

in Table 5.3

and are described

in Table 5.3

- vm_rb As well as being in a linked list, all the VMAs are stored on a

red-black tree for fast lookups. This is important for page fault

handling when finding the correct region quickly is important, especially

for a large number of mapped regions;

- vm_next_share Shared VMA regions based on file mappings (such as

shared libraries) linked together with this field;

- vm_pprev_share The complement of

vm_next_share; - vm_ops The

vm_opsfield contains functions pointers foropen(),close()andnopage(). These are needed for syncing with information from the disk; - vm_pgoff This is the page aligned offset within a file that is

memory mapped;

- vm_file The struct file pointer to the file being mapped;

- vm_raend This is the end address of a read-ahead window. When a fault

occurs, a number of additional pages after the desired page will be paged in.

This field determines how many additional pages are faulted in;

- vm_private_data Used by some device drivers to store private

information. Not of concern to the memory manager.

All the regions are linked together on a linked list ordered by address via

the vm_next field. When searching for a free area, it is a simple

matter of traversing the list but a frequent operation is to search for the

VMA for a particular address such as during page faulting for example. In this

case, the red-black tree is traversed as it has

![]() search time on average. The tree is ordered so that lower addresses than

the current node are on the left leaf and higher addresses are on the right.

search time on average. The tree is ordered so that lower addresses than

the current node are on the left leaf and higher addresses are on the right.

5.4.1 File/Device backed memory regions

In the event the region is backed by a file, the vm_file leads

to an associated address_space as shown in Figure 5.2. The struct contains information

of relevance to the filesystem such as the number of dirty pages which must

be flushed to disk. It is declared as follows in ![]()

linux/fs.h![]() :

:

401 struct address_space {

402 struct list_head clean_pages;

403 struct list_head dirty_pages;

404 struct list_head locked_pages;

405 unsigned long nrpages;

406 struct address_space_operations *a_ops;

407 struct inode *host;

408 struct vm_area_struct *i_mmap;

409 struct vm_area_struct *i_mmap_shared;

410 spinlock_t i_shared_lock;

411 int gfp_mask;

412 };

A brief description of each field is as follows:

- clean_pages A list of clean pages which do not have to be synchronised with the disk;

- dirty_pages Pages that the process has touched and need to by sync-ed with the backing storage;

- locked_pages The list of pages locked in memory;

- nrpages Number of resident pages in use by the address space;

- a_ops A struct of function pointers within the filesystem;

- host The host inode the file belongs to;

- i_mmap A pointer to the VMA the address space is part of;

- i_mmap_shared A pointer to the next VMA which shares this address space;

- i_shared_lock A spinlock to protect this structure;

- gfp_mask The mask to use when calling

__alloc_pages()for new pages.

Periodically the memory manager will need to flush information to disk. The

memory manager doesn't know and doesn't care how information is written

to disk, so the a_ops struct is used to call the relevant

functions. It is defined as follows in ![]()

linux/fs.h![]() :

:

383 struct address_space_operations {

384 int (*writepage)(struct page *);

385 int (*readpage)(struct file *, struct page *);

386 int (*sync_page)(struct page *);

387 /*

388 * ext3 requires that a successful prepare_write()

* call be followed

389 * by a commit_write() call - they must be balanced

390 */

391 int (*prepare_write)(struct file *, struct page *,

unsigned, unsigned);

392 int (*commit_write)(struct file *, struct page *,

unsigned, unsigned);

393 /* Unfortunately this kludge is needed for FIBMAP.

* Don't use it */

394 int (*bmap)(struct address_space *, long);

395 int (*flushpage) (struct page *, unsigned long);

396 int (*releasepage) (struct page *, int);

397 #define KERNEL_HAS_O_DIRECT

398 int (*direct_IO)(int, struct inode *, struct kiobuf *,

unsigned long, int);

399 };

These fields are all function pointers which are described as follows;

- writepage Write a page to disk. The offset within the file to write

to is stored within the page struct. It is up to the filesystem specific

code to find the block. See

buffer.c:block_write_full_page(); - readpage Read a page from disk. See

buffer.c:block_read_full_page(); - sync_page Sync a dirty page with disk. See

buffer.c:block_sync_page(); - prepare_write This is called before data is copied from userspace

into a page that will be written to disk. With a journaled filesystem,

this ensures the filesystem log is up to date. With normal filesystems,

it makes sure the needed buffer pages are allocated. See

buffer.c:block_prepare_write(); - commit_write After the data has been copied from userspace,

this function is called to commit the information to disk. See

buffer.c:block_commit_write(); - bmap Maps a block so that raw IO can be performed. Only of concern

to the filesystem specific code;

- flushpage This makes sure there is no IO pending on a page before

releasing it. See

buffer.c:discard_bh_page(); - releasepage This tries to flush all the buffers associated with a

page before freeing the page itself. See

try_to_free_buffers().

5.4.2 Creating A Memory Region

The system call mmap() is provided for creating new

memory regions within a process. For the x86, the function calls

sys_mmap2() which calls do_mmap2() directly with

the same parameters. do_mmap2() is responsible for acquiring the

parameters needed by do_mmap_pgoff(), which is the principle

function for creating new areas for all architectures.

do_mmap2() first clears the MAP_DENYWRITE and

MAP_EXECUTABLE bits from the flags parameter as they

are ignored by Linux, which is confirmed by the mmap() manual page. If

a file is being mapped, do_mmap2() will look up the

struct file based on the file descriptor passed as a parameter and

acquire the mm_struct![]()

mmap_sem semaphore before calling

do_mmap_pgoff().

do_mmap_pgoff() begins by performing some basic sanity checks. It

first checks that the appropriate filesystem or device functions are available

if a file or device is being mapped. It then ensures the size of the mapping

is page aligned and that it does not attempt to create a mapping in the

kernel portion of the address space. It then makes sure the size of the

mapping does not overflow the range of pgoff and finally that the

process does not have too many mapped regions already.

This rest of the function is large but broadly speaking it takes the following steps:

- Sanity check the parameters;

- Find a free linear address space large enough for the memory mapping. If

a filesystem or device specific

get_unmapped_area()function is provided, it will be used otherwisearch_get_unmapped_area()is called; - Calculate the VM flags and check them against the file access permissions;

- If an old area exists where the mapping is to take place, fix it up so that it is suitable for the new mapping;

- Allocate a

vm_area_structfrom the slab allocator and fill in its entries; - Link in the new VMA;

- Call the filesystem or device specific mmap function;

- Update statistics and return.

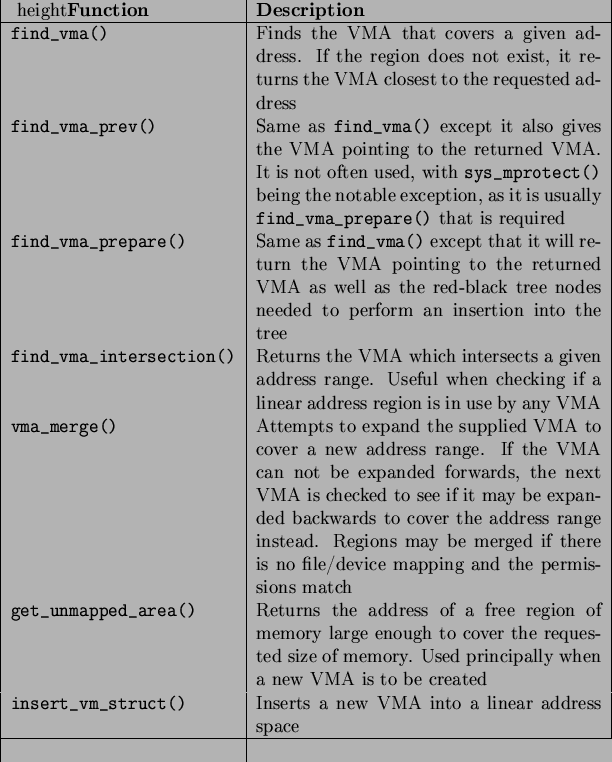

5.4.3 Finding a Mapped Memory Region

A common operation is to find the VMA a particular address belongs to,

such as during operations like page faulting, and the function responsible

for this is find_vma(). The function find_vma() and

other API functions affecting memory regions are listed in Table 5.3.

It first checks the mmap_cache field which caches the result of

the last call to find_vma() as it is quite likely the same region

will be needed a few times in succession. If it is not the desired region,

the red-black tree stored in the mm_rb field is traversed. If

the desired address is not contained within any VMA, the function will return

the VMA closest to the requested address so it is important callers

double check to ensure the returned VMA contains the desired address.

A second function called find_vma_prev() is provided which is

functionally the same as find_vma() except that it also returns a

pointer to the VMA preceding the desired VMA5.8which is required as the list is a singly linked list. This is rarely used

but notably, it is used when two VMAs are being compared to determine if

they may be merged. It is also used when removing a memory region so that

the singly linked list may be updated.

The last function of note for searching VMAs is

find_vma_intersection() which is used to find a VMA which

overlaps a given address range. The most notable use of this is during a

call to do_brk() when a region is growing up. It is important to

ensure that the growing region will not overlap an old region.

5.4.4 Finding a Free Memory Region

When a new area is to be memory mapped, a free region has to be found that

is large enough to contain the new mapping. The function responsible for

finding a free area is get_unmapped_area().

As the call graph in Figure 5.5 indicates, there is

little work involved with finding an unmapped area. The function is passed a

number of parameters. A struct file is passed representing the

file or device to be mapped as well as pgoff which is the offset

within the file that is been mapped. The requested address for

the mapping is passed as well as its length. The last parameter

is the protection flags for the area.

If a device is being mapped, such as a video card, the associated

f_op![]()

get_unmapped_area() is used. This is

because devices or files may have additional requirements for mapping that

generic code can not be aware of, such as the address having to be aligned

to a particular virtual address.

If there are no special requirements, the architecture specific function

arch_get_unmapped_area() is called. Not all

architectures provide their own function. For those that don't, there is a

generic function provided in mm/mmap.c.

5.4.5 Inserting a memory region

The principal function for inserting a new memory region is

insert_vm_struct() whose call graph can be seen in Figure

5.6. It is a very simple function which first calls

find_vma_prepare() to find the appropriate VMAs the new region is

to be inserted between and the correct nodes within the red-black tree. It then

calls __vma_link() to do the work of linking in the new VMA.

The function insert_vm_struct() is rarely used as it does not

increase the map_count field. Instead, the function commonly

used is __insert_vm_struct() which performs the same tasks

except that it increments map_count.

Two varieties of linking functions are provided, vma_link()

and __vma_link(). vma_link() is intended for use when

no locks are held. It will acquire all the necessary locks, including locking

the file if the VMA is a file mapping before calling __vma_link()

which places the VMA in the relevant lists.

It is important to note that many functions do not use the

insert_vm_struct() functions but instead prefer to

call find_vma_prepare() themselves followed by a later

vma_link() to avoid having to traverse the tree multiple times.

The linking in __vma_link() consists of three stages which are

contained in three separate functions. __vma_link_list() inserts

the VMA into the linear, singly linked list. If it is the first mapping in

the address space (i.e. prev is NULL), it will become the red-black tree

root node. The second stage is linking the node into the red-black tree

with __vma_link_rb(). The final stage is fixing up the file

share mapping with __vma_link_file() which basically inserts

the VMA into the linked list of VMAs via the vm_pprev_share()

and vm_next_share() fields.

5.4.6 Merging contiguous regions

Linux used to have a function called

merge_segments() [#!kernel-2-2!#] which was responsible for

merging adjacent regions of memory together if the file and permissions

matched. The objective was to remove the number of VMAs required, especially

as many operations resulted in a number of mappings being created such as

calls to sys_mprotect(). This was an expensive operation as it

could result in large portions of the mappings being traversed and was later

removed as applications, especially those with many mappings, spent a long

time in merge_segments().

The equivalent function which exists now is called vma_merge()

and it is only used in two places. The first user is sys_mmap()

which calls it if an anonymous region is being mapped, as anonymous regions

are frequently mergable. The second time is during do_brk() which is

expanding one region into a newly allocated one where the two regions should

be merged. Rather than merging two regions, the function vma_merge()

checks if an existing region may be expanded to satisfy the new allocation

negating the need to create a new region. A region may be expanded if there are

no file or device mappings and the permissions of the two areas are the same.

Regions are merged elsewhere, although no function is explicitly called to

perform the merging. The first is during a call to sys_mprotect()

during the fixup of areas where the two regions will be merged if the two

sets of permissions are the same after the permissions in the affected region

change. The second is during a call to move_vma() when it is

likely that similar regions will be located beside each other.

5.4.7 Remapping and moving a memory region

mremap() is a system call provided to grow or shrink an existing

memory mapping. This is implemented by the function sys_mremap()

which may move a memory region if it is growing or it would overlap another

region and MREMAP_FIXED is not specified in the flags. The

call graph is illustrated in Figure 5.7.

If a region is to be moved, do_mremap() first calls

get_unmapped_area() to find a region large enough to contain the

new resized mapping and then calls move_vma() to move the old

VMA to the new location. See Figure 5.8 for the call graph

to move_vma().

First move_vma() checks if the new location may be merged with the

VMAs adjacent to the new location. If they can not be merged, a new VMA is

allocated literally one PTE at a time. Next move_page_tables()

is called(see Figure 5.9 for its call graph) which

copies all the page table entries from the old mapping to the new one. While

there may be better ways to move the page tables, this method makes error

recovery trivial as backtracking is relatively straight forward.

The contents of the pages are not copied. Instead, zap_page_range()

is called to swap out or remove all the pages from the old mapping and the

normal page fault handling code will swap the pages back in from backing

storage or from files or will call the device specific do_nopage()

function.

5.4.8 Locking a Memory Region

Linux can lock pages from an address range into memory via the system call

mlock() which is implemented by sys_mlock() whose call

graph is shown in Figure 5.10. At a high level, the function

is simple; it creates a VMA for the address range to be locked, sets the

VM_LOCKED flag on it and forces all the pages to be present with

make_pages_present(). A second system call mlockall()

which maps to sys_mlockall() is also provided which is a

simple extension to do the same work as sys_mlock() except for

every VMA on the calling process. Both functions rely on the core function

do_mlock() to perform the real work of finding the affected VMAs

and deciding what function is needed to fix up the regions as described later.

There are some limitations to what memory may be locked. The address range

must be page aligned as VMAs are page aligned. This is addressed by simply

rounding the range up to the nearest page aligned range. The second proviso

is that the process limit RLIMIT_MLOCK imposed by the system

administrator may not be exceeded. The last proviso is that each process may

only lock half of physical memory at a time. This is a bit non-functional as

there is nothing to stop a process forking a number of times and each child

locking a portion but as only root processes are allowed to lock pages, it does

not make much difference. It is safe to presume that a root process is trusted

and knows what it is doing. If it does not, the system administrator with the

resulting broken system probably deserves it and gets to keep both parts of it.

5.4.9 Unlocking the region

The system calls munlock() and munlockall() provide the

corollary for the locking functions and map to sys_munlock()

and sys_munlockall() respectively. The functions are much simpler

than the locking functions as they do not have to make numerous checks. They

both rely on the same do_mmap() function to fix up the regions.

5.4.10 Fixing up regions after locking

When locking or unlocking, VMAs will be affected in one of four ways, each of

which must be fixed up by mlock_fixup(). The locking may affect

the whole VMA in which case mlock_fixup_all() is called. The

second condition, handled by mlock_fixup_start(), is where the

start of the region is locked, requiring that a new VMA be allocated to map the

new area. The third condition, handled by mlock_fixup_end(),

is predictably enough where the end of the region is locked. Finally,

mlock_fixup_middle() handles the case where the middle of a

region is mapped requiring two new VMAs to be allocated.

It is interesting to note that VMAs created as a result of locking are never merged, even when unlocked. It is presumed that processes which lock regions will need to lock the same regions over and over again and it is not worth the processor power to constantly merge and split regions.

5.4.11 Deleting a memory region

The function responsible for deleting memory regions or parts thereof is

do_munmap(). It is a relatively simple operation in comparison

to the other memory region related operations and is basically divided up

into three parts. The first is to fix up the red-black tree for the region

that is about to be unmapped. The second is to release the pages and PTEs

related to the region to be unmapped and the third is to fix up the regions

if a hole has been generated.

To ensure the red-black tree is ordered correctly, all VMAs to be affected by

the unmap are placed on a linked list called free and then deleted

from the red-black tree with rb_erase(). The regions if they still

exist will be added with their new addresses later during the fixup.

Next the linked list VMAs on free is walked through and checked

to ensure it is not a partial unmapping. Even if a region is just to be

partially unmapped, remove_shared_vm_struct() is still called

to remove the shared file mapping. Again, if this is a partial unmapping,

it will be recreated during fixup. zap_page_range() is called to

remove all the pages associated with the region about to be unmapped before

unmap_fixup() is called to handle partial unmappings.

Lastly free_pgtables() is called to try and free up all the

page table entries associated with the unmapped region. It is important

to note that the page table entry freeing is not exhaustive. It will only

unmap full PGD directories and their entries so for example, if only half a

PGD was used for the mapping, no page table entries will be freed. This is

because a finer grained freeing of page table entries would be too expensive

to free up data structures that are both small and likely to be used again.

5.4.12 Deleting all memory regions

During process exit, it is necessary to unmap all VMAs associated with a

mm_struct. The function responsible is exit_mmap(). It

is a very simply function which flushes the CPU cache before walking through

the linked list of VMAs, unmapping each of them in turn and freeing up

the associated pages before flushing the TLB and deleting the page table

entries. It is covered in detail in the Code Commentary.

Footnotes

- ... VMA5.8

- The VMA list is one of the very rare cases where a singly linked list is used in the kernel.

Next: 5.5 Exception Handling Up: 5. Process Address Space Previous: 5.3 Process Address Space Contents Index Mel 2004-02-15

![\includegraphics[width=15cm]{graphs/process.ps}](/MyLDP/BOOKS/VirtMem/img21.png)

![\includegraphics[width=5cm]{graphs/get_unmapped_area.ps}](/MyLDP/BOOKS/VirtMem/img26.png)

![\includegraphics[width=11cm]{graphs/insert_vm_struct.ps}](/MyLDP/BOOKS/VirtMem/img27.png)

![\includegraphics[width=17cm]{graphs/sys_mremap.ps}](/MyLDP/BOOKS/VirtMem/img28.png)

![\includegraphics[width=17cm]{graphs/move_vma.ps}](/MyLDP/BOOKS/VirtMem/img29.png)

![\includegraphics[width=13cm]{graphs/move_page_tables.ps}](/MyLDP/BOOKS/VirtMem/img30.png)

![\includegraphics[width=17cm]{graphs/sys_mlock.ps}](/MyLDP/BOOKS/VirtMem/img31.png)

![\includegraphics[width=17.5cm]{graphs/do_munmap.ps}](/MyLDP/BOOKS/VirtMem/img32.png)